In crypto trading, having complete data is not optional - it is foundational. A single missing data record can distort volumes, P&L, risk exposures, and regulatory reports. Yet, in high-throughput feeds, gaps are inevitable unless reconciled- so robust detection and timely remediation are essential for accurate reporting and control.

The Data Quality Challenge

Collecting complete market data from cryptocurrency exchanges is harder than it sounds. On paper, it's simple: connect to a WebSocket, receive trades, store them. In practice, we wrestle with unreliable connections, massive data volumes (millions of trades per day across hundreds of markets) and the need to deliver correct data to consumers in real-time.

Most data providers operate on a "best effort" basis. They stream data, maybe log disconnections, and hope for the best. When a client asks "do you have all the trades for BTC-USD on January 15th?", the honest answer is often "probably."

At Koinju, we took a different approach. We wanted provable completeness: the ability to mathematically demonstrate that for any given market and time range, we have captured every single trade. No gaps, no missing data, no "probably."

This post focuses on our reconciliation mechanism - the core innovation that enables provable completeness in real-time. We'll walk you through a real scenario, demonstrating how our different system components work together and interact with each other to deliver a robust reconciliation solution.

We use Coinbase as the implementation example, but the principles apply to any exchange that provides sequential trade IDs (e.g. Kraken, Bitstamp). For exchanges without sequential IDs, we use probabilistic candle-based validation (a topic for a future post).

The Scenario: A Network Interruption

Let's start with a real situation. Our WebSocket collector is streaming BTC-USD trades from Coinbase, when a brief network interruption occurs. The trades are inserted in the following order:

INSERT INTO public_data.trade (exchange, market, side, quantity, price, timestamp, trade_id, fill_trade)

VALUES

('coinbase', 'BTC-USD', 'sell', 0.00512, 43250.00, '2025-12-16T10:10:43.870', '7175159', false),

('coinbase', 'BTC-USD', 'buy', 0.00128, 43251.00, '2025-12-16T10:10:43.872', '7175160', false),

('coinbase', 'BTC-USD', 'sell', 0.00256, 43249.50, '2025-12-16T10:10:43.874', '7175161', false),

('coinbase', 'BTC-USD', 'buy', 0.00064, 43252.00, '2025-12-16T10:10:43.880', '7175163', false)

INSERT INTO public_data.trade (exchange, market, side, quantity, price, timestamp, trade_id, fill_trade)

VALUES

('coinbase', 'BTC-USD', 'sell', 0.00512, 43250.00, '2025-12-16T10:10:43.870', '7175159', false),

('coinbase', 'BTC-USD', 'buy', 0.00128, 43251.00, '2025-12-16T10:10:43.872', '7175160', false),

('coinbase', 'BTC-USD', 'sell', 0.00256, 43249.50, '2025-12-16T10:10:43.874', '7175161', false),

('coinbase', 'BTC-USD', 'buy', 0.00064, 43252.00, '2025-12-16T10:10:43.880', '7175163', false)

INSERT INTO public_data.trade (exchange, market, side, quantity, price, timestamp, trade_id, fill_trade)

VALUES

('coinbase', 'BTC-USD', 'sell', 0.00512, 43250.00, '2025-12-16T10:10:43.870', '7175159', false),

('coinbase', 'BTC-USD', 'buy', 0.00128, 43251.00, '2025-12-16T10:10:43.872', '7175160', false),

('coinbase', 'BTC-USD', 'sell', 0.00256, 43249.50, '2025-12-16T10:10:43.874', '7175161', false),

('coinbase', 'BTC-USD', 'buy', 0.00064, 43252.00, '2025-12-16T10:10:43.880', '7175163', false)

Coinbase assigns sequential integer IDs to trades. The jump from 7175161 to 7175163 points to a clear issue: trade 7175162 is missing. Our application didn't crash, neither did it log an error- it simply never received that trade.

How do we detect this? How do we fix it? And how do we prove it's fixed?

Step 1: Storing Trades

All trades land in ClickHouse's trade table:

CREATE TABLE public_data.trade

(

exchange LowCardinality(String),

market LowCardinality(String),

side LowCardinality(String),

quantity Decimal(76, 20),

price Decimal(76, 20),

timestamp DateTime64(9, 'UTC'),

trade_id String,

fill_trade Bool,

created_at DateTime64(9, 'UTC') DEFAULT now64(9, 'UTC')

)

ENGINE = MergeTree()

ORDER BY (exchange, market, timestamp)CREATE TABLE public_data.trade

(

exchange LowCardinality(String),

market LowCardinality(String),

side LowCardinality(String),

quantity Decimal(76, 20),

price Decimal(76, 20),

timestamp DateTime64(9, 'UTC'),

trade_id String,

fill_trade Bool,

created_at DateTime64(9, 'UTC') DEFAULT now64(9, 'UTC')

)

ENGINE = MergeTree()

ORDER BY (exchange, market, timestamp)CREATE TABLE public_data.trade

(

exchange LowCardinality(String),

market LowCardinality(String),

side LowCardinality(String),

quantity Decimal(76, 20),

price Decimal(76, 20),

timestamp DateTime64(9, 'UTC'),

trade_id String,

fill_trade Bool,

created_at DateTime64(9, 'UTC') DEFAULT now64(9, 'UTC')

)

ENGINE = MergeTree()

ORDER BY (exchange, market, timestamp)The fill_trade boolean will become important later as it distinguishes original WebSocket trades from backfilled ones.

At this point, our table has 4 trades, while in reality it should have 5. The gap exists silently.

Step 2: Detecting the Gap (T+5 seconds)

A Refreshable Materialized View runs every 5 seconds, scanning for sequence breaks:

CREATE MATERIALIZED VIEW public_data.sequence_discontinuity_mv

REFRESH EVERY 5 SECOND APPEND

TO public_data.sequence_discontinuity

AS

SELECT * EXCEPT diff_with_start_id

FROM (

SELECT

market,

exchange,

lagInFrame(trade_id, 1, trade_id - 1) OVER w AS start_id,

trade_id AS end_id,

lagInFrame(timestamp, 1, timestamp) OVER w AS start_timestamp,

timestamp AS end_timestamp,

multiIf(

diff_with_start_id > 1, 'gap',

duplicated_trades = 1, 'duplicate',

'unknown'

) AS type,

trade_id - start_id AS diff_with_start_id,

if(diff_with_start_id > 0, diff_with_start_id - 1, 0) AS nb_missing_trades,

toUInt8(diff_with_start_id = 0) AS duplicated_trades

FROM (

SELECT market, exchange, toInt64OrZero(trade_id) AS trade_id, timestamp

FROM public_data.trade

WHERE timestamp > now64(9, 'UTC') - INTERVAL 10 MINUTE

)

WINDOW w AS (PARTITION BY market, exchange ORDER BY trade_id)

)

WHERE diff_with_start_id != 1

CREATE MATERIALIZED VIEW public_data.sequence_discontinuity_mv

REFRESH EVERY 5 SECOND APPEND

TO public_data.sequence_discontinuity

AS

SELECT * EXCEPT diff_with_start_id

FROM (

SELECT

market,

exchange,

lagInFrame(trade_id, 1, trade_id - 1) OVER w AS start_id,

trade_id AS end_id,

lagInFrame(timestamp, 1, timestamp) OVER w AS start_timestamp,

timestamp AS end_timestamp,

multiIf(

diff_with_start_id > 1, 'gap',

duplicated_trades = 1, 'duplicate',

'unknown'

) AS type,

trade_id - start_id AS diff_with_start_id,

if(diff_with_start_id > 0, diff_with_start_id - 1, 0) AS nb_missing_trades,

toUInt8(diff_with_start_id = 0) AS duplicated_trades

FROM (

SELECT market, exchange, toInt64OrZero(trade_id) AS trade_id, timestamp

FROM public_data.trade

WHERE timestamp > now64(9, 'UTC') - INTERVAL 10 MINUTE

)

WINDOW w AS (PARTITION BY market, exchange ORDER BY trade_id)

)

WHERE diff_with_start_id != 1

CREATE MATERIALIZED VIEW public_data.sequence_discontinuity_mv

REFRESH EVERY 5 SECOND APPEND

TO public_data.sequence_discontinuity

AS

SELECT * EXCEPT diff_with_start_id

FROM (

SELECT

market,

exchange,

lagInFrame(trade_id, 1, trade_id - 1) OVER w AS start_id,

trade_id AS end_id,

lagInFrame(timestamp, 1, timestamp) OVER w AS start_timestamp,

timestamp AS end_timestamp,

multiIf(

diff_with_start_id > 1, 'gap',

duplicated_trades = 1, 'duplicate',

'unknown'

) AS type,

trade_id - start_id AS diff_with_start_id,

if(diff_with_start_id > 0, diff_with_start_id - 1, 0) AS nb_missing_trades,

toUInt8(diff_with_start_id = 0) AS duplicated_trades

FROM (

SELECT market, exchange, toInt64OrZero(trade_id) AS trade_id, timestamp

FROM public_data.trade

WHERE timestamp > now64(9, 'UTC') - INTERVAL 10 MINUTE

)

WINDOW w AS (PARTITION BY market, exchange ORDER BY trade_id)

)

WHERE diff_with_start_id != 1

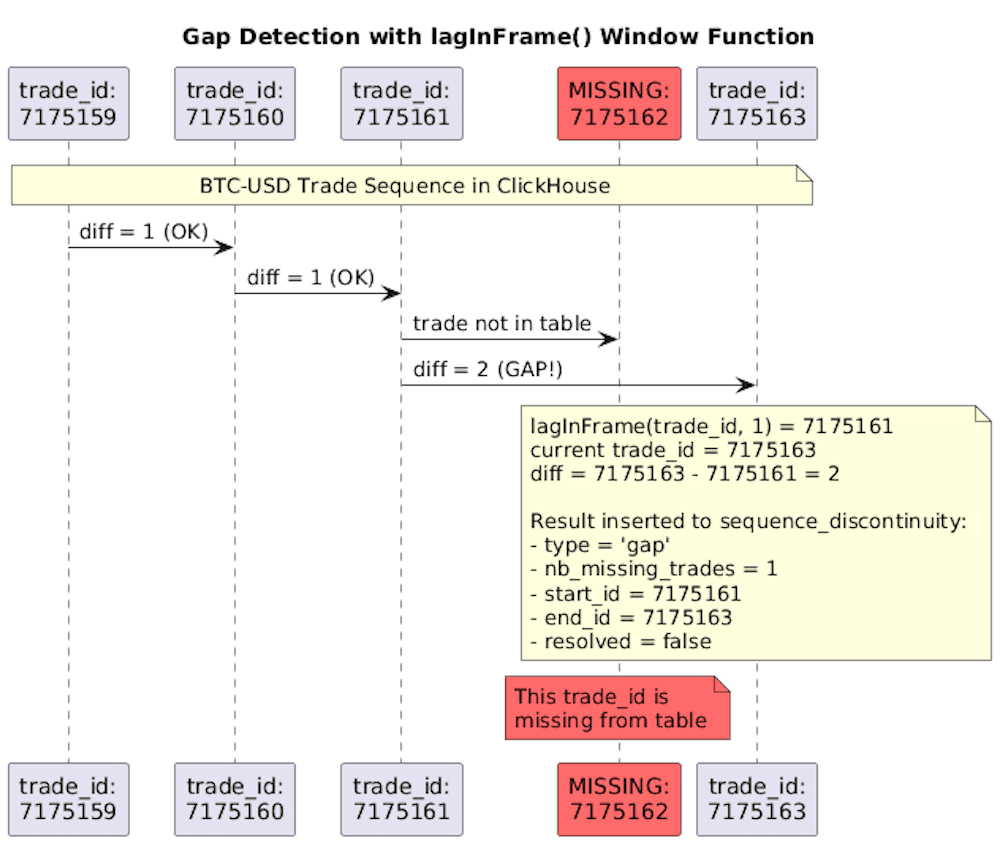

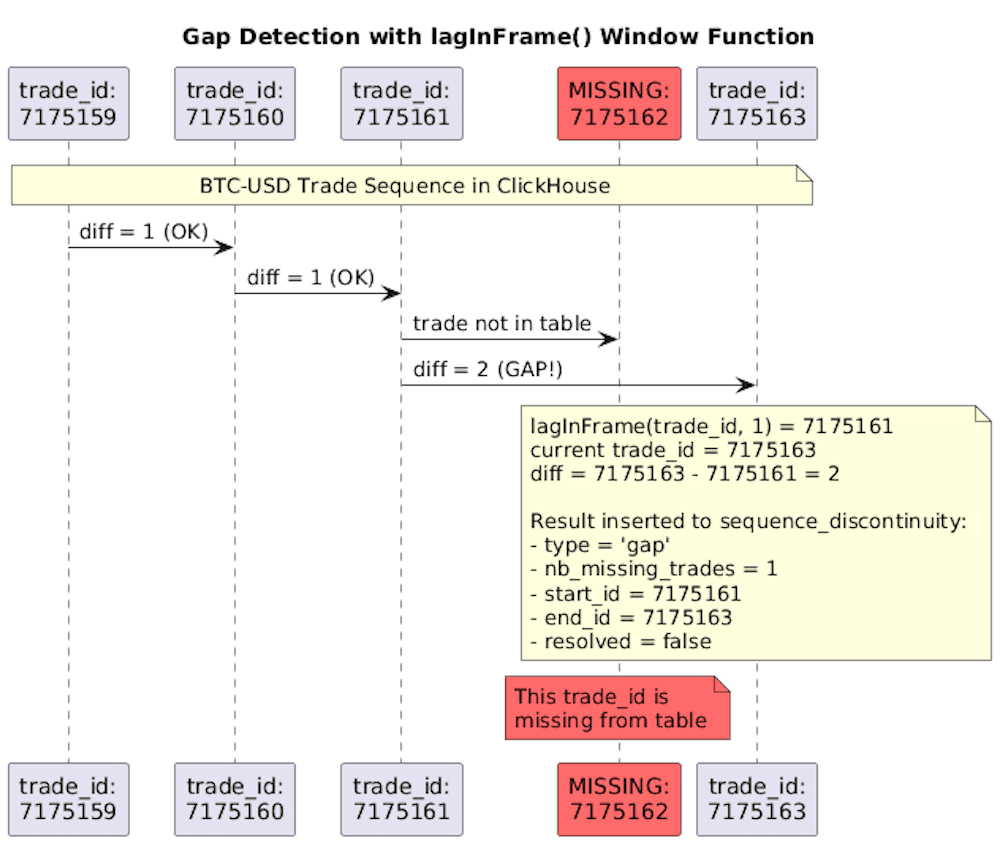

How It Detects Our Gap

The key is the lagInFrame() window function. For each trade, it looks at the previous trade_id in the same market:

When the MV processes trade 7175163, it calculates:

Previous trade_id (via lagInFrame): 7175161

Current trade_id: 7175163

Difference: 7175163 - 7175161 = 2

A difference of 2 means one trade is missing. The MV inserts a row into sequence_discontinuity:

SELECT market, start_id, end_id, type, nb_missing_trades, resolved

FROM public_data.sequence_discontinuity

WHERE market = 'BTC-USD';

SELECT market, start_id, end_id, type, nb_missing_trades, resolved

FROM public_data.sequence_discontinuity

WHERE market = 'BTC-USD';

SELECT market, start_id, end_id, type, nb_missing_trades, resolved

FROM public_data.sequence_discontinuity

WHERE market = 'BTC-USD';

The gap is now detected and recorded without any application code, without any scheduled job, purely within ClickHouse.

The Sequence Discontinuity Table

Detected gaps are stored in a table designed for self-healing:

CREATE TABLE public_data.sequence_discontinuity

(

market LowCardinality(String),

exchange LowCardinality(String),

start_id Int64,

end_id Int64,

start_timestamp DateTime64(9, 'UTC'),

end_timestamp DateTime64(9, 'UTC'),

type LowCardinality(String),

nb_missing_trades Int64,

detected_at DateTime64(9, 'UTC') DEFAULT now64(9, 'UTC'),

resolved_at Nullable(DateTime64(9, 'UTC')) DEFAULT null,

resolved Boolean MATERIALIZED resolved_at IS NOT NULL

)

ENGINE = ReplacingMergeTree(resolved)

ORDER BY (exchange, market, start_id, end_id)

CREATE TABLE public_data.sequence_discontinuity

(

market LowCardinality(String),

exchange LowCardinality(String),

start_id Int64,

end_id Int64,

start_timestamp DateTime64(9, 'UTC'),

end_timestamp DateTime64(9, 'UTC'),

type LowCardinality(String),

nb_missing_trades Int64,

detected_at DateTime64(9, 'UTC') DEFAULT now64(9, 'UTC'),

resolved_at Nullable(DateTime64(9, 'UTC')) DEFAULT null,

resolved Boolean MATERIALIZED resolved_at IS NOT NULL

)

ENGINE = ReplacingMergeTree(resolved)

ORDER BY (exchange, market, start_id, end_id)

CREATE TABLE public_data.sequence_discontinuity

(

market LowCardinality(String),

exchange LowCardinality(String),

start_id Int64,

end_id Int64,

start_timestamp DateTime64(9, 'UTC'),

end_timestamp DateTime64(9, 'UTC'),

type LowCardinality(String),

nb_missing_trades Int64,

detected_at DateTime64(9, 'UTC') DEFAULT now64(9, 'UTC'),

resolved_at Nullable(DateTime64(9, 'UTC')) DEFAULT null,

resolved Boolean MATERIALIZED resolved_at IS NOT NULL

)

ENGINE = ReplacingMergeTree(resolved)

ORDER BY (exchange, market, start_id, end_id)

The ReplacingMergeTree(resolved) engine is crucial: when we later mark a gap as resolved, ClickHouse will deduplicate and keep only the resolved version.

Step 3: Resolving the Gap (T+6 seconds)

Our cryptovalidator service polls for unresolved gaps:

SELECT * FROM sequence_discontinuity

WHERE type = 'gap' AND NOT

SELECT * FROM sequence_discontinuity

WHERE type = 'gap' AND NOT

SELECT * FROM sequence_discontinuity

WHERE type = 'gap' AND NOT

It finds our gap and fetches the missing trade from Coinbase's REST API:

Coinbase returns trade 7175162. The validator inserts it with fill_trade=true:

INSERT INTO public_data.trade

(exchange, market, side, quantity, price, timestamp, trade_id, fill_trade)

VALUES

('coinbase', 'BTC-USD', 'sell', 0.00192, 43250.50, '2025-12-16T10:10:43.876', '7175162', true)

INSERT INTO public_data.trade

(exchange, market, side, quantity, price, timestamp, trade_id, fill_trade)

VALUES

('coinbase', 'BTC-USD', 'sell', 0.00192, 43250.50, '2025-12-16T10:10:43.876', '7175162', true)

INSERT INTO public_data.trade

(exchange, market, side, quantity, price, timestamp, trade_id, fill_trade)

VALUES

('coinbase', 'BTC-USD', 'sell', 0.00192, 43250.50, '2025-12-16T10:10:43.876', '7175162', true)

The missing trade is now in our table. But how do we know the gap is truly fixed?

Step 4: Verifying Resolution (T+8 seconds)

A second Refreshable Materialized View continuously checks if gaps have been filled:

CREATE MATERIALIZED VIEW public_data.filled_sequence_discontinuity_mv

REFRESH EVERY 2 SECOND APPEND

TO public_data.sequence_discontinuity

AS

SELECT

* EXCEPT resolved_at,

now64(9, 'UTC') AS resolved_at

FROM public_data.sequence_discontinuity sd

WHERE sd.type = 'gap' AND NOT sd.resolved

AND (

SELECT count(DISTINCT toInt64OrZero(trade_id))

FROM public_data.trade t

WHERE t.exchange = sd.exchange

AND t.market = sd.market

AND toInt64OrZero(t.trade_id) > sd.start_id

AND toInt64OrZero(t.trade_id) < sd.end_id

)

CREATE MATERIALIZED VIEW public_data.filled_sequence_discontinuity_mv

REFRESH EVERY 2 SECOND APPEND

TO public_data.sequence_discontinuity

AS

SELECT

* EXCEPT resolved_at,

now64(9, 'UTC') AS resolved_at

FROM public_data.sequence_discontinuity sd

WHERE sd.type = 'gap' AND NOT sd.resolved

AND (

SELECT count(DISTINCT toInt64OrZero(trade_id))

FROM public_data.trade t

WHERE t.exchange = sd.exchange

AND t.market = sd.market

AND toInt64OrZero(t.trade_id) > sd.start_id

AND toInt64OrZero(t.trade_id) < sd.end_id

)

CREATE MATERIALIZED VIEW public_data.filled_sequence_discontinuity_mv

REFRESH EVERY 2 SECOND APPEND

TO public_data.sequence_discontinuity

AS

SELECT

* EXCEPT resolved_at,

now64(9, 'UTC') AS resolved_at

FROM public_data.sequence_discontinuity sd

WHERE sd.type = 'gap' AND NOT sd.resolved

AND (

SELECT count(DISTINCT toInt64OrZero(trade_id))

FROM public_data.trade t

WHERE t.exchange = sd.exchange

AND t.market = sd.market

AND toInt64OrZero(t.trade_id) > sd.start_id

AND toInt64OrZero(t.trade_id) < sd.end_id

)

This correlated subquery syntax is available in Clickhouse 25.10 and later.

This MV asks: "For each unresolved gap, do all the missing trade_ids now exist?"

For our gap (start_id=7175161, end_id=7175163, nb_missing_trades=1), it counts trades where trade_id > 7175161 AND trade_id < 7175163. It finds one trade (7175162) - which equals nb_missing_trades.

The gap is filled! The MV inserts a new row with resolved_at = now():

Self-Healing via ReplacingMergeTree

Now we have two rows for the same gap:

Original: (start_id=7175161, end_id=7175163, resolved=false)

New: (start_id=7175161, end_id=7175163, resolved=true, resolved_at='2025-12-16 10:10:52')

Because we use ReplacingMergeTree(resolved) with the same ORDER BY key, ClickHouse keeps the row where resolved=true during background merges.

Query with FINAL to see the deduplicated state:

SELECT market, start_id, end_id, resolved, resolved_at

FROM public_data.sequence_discontinuity FINAL

WHERE market = 'BTC-USD';

SELECT market, start_id, end_id, resolved, resolved_at

FROM public_data.sequence_discontinuity FINAL

WHERE market = 'BTC-USD';

SELECT market, start_id, end_id, resolved, resolved_at

FROM public_data.sequence_discontinuity FINAL

WHERE market = 'BTC-USD';

The entire cycle,detection, resolution, verification,completed in under 10 seconds, entirely automatically.

Proving Completeness

For any market with sequential trade_ids, we can at any time prove completeness:

SELECT

market,

min(toInt64OrZero(trade_id)) AS first_id,

max(toInt64OrZero(trade_id)) AS last_id,

uniqExact(trade_id) AS actual_trades,

last_id - first_id + 1 AS expected_trades,

actual_trades = expected_trades AS is_complete

FROM public_data.trade

WHERE exchange = 'coinbase'

GROUP BY

SELECT

market,

min(toInt64OrZero(trade_id)) AS first_id,

max(toInt64OrZero(trade_id)) AS last_id,

uniqExact(trade_id) AS actual_trades,

last_id - first_id + 1 AS expected_trades,

actual_trades = expected_trades AS is_complete

FROM public_data.trade

WHERE exchange = 'coinbase'

GROUP BY

SELECT

market,

min(toInt64OrZero(trade_id)) AS first_id,

max(toInt64OrZero(trade_id)) AS last_id,

uniqExact(trade_id) AS actual_trades,

last_id - first_id + 1 AS expected_trades,

actual_trades = expected_trades AS is_complete

FROM public_data.trade

WHERE exchange = 'coinbase'

GROUP BY

If is_complete = true, we have every trade in the sequence. No probabilistic sampling or unreliable volume-candle based on mathematical certainty.

Key Differentiators

Database-Native Gap Detection

Traditional approaches poll for gaps using scheduled jobs in application code. Our approach pushes detection into the database itself:

No external scheduler to manage or monitor

Scales with ClickHouse, not with application instances

Independent of acquisition layer—works regardless of how trades arrive or if validation infrastructure restarts

Self-Healing Architecture

The combination of Refreshable MVs and ReplacingMergeTree creates a system that:

Detects its own problems

Triggers its own repairs

Verifies its own fixes

Maintains an audit trail of every gap and resolution

Conclusion

By pushing gap detection into ClickHouse's refreshable materialized views, we've built a reconciliation system that:

Detects sequence breaks within seconds of occurrence

Repairs gaps automatically via REST API backfill

Verifies resolution through continuous monitoring

Proves completeness through sequence validation

This approach decouples verification from acquisition, allowing us to scale and maintain high data quality with minimal operational overhead.

The system works for any exchange with sequential trade IDs. For exchanges without this property, we use probabilistic candle-based validation – a topic for a future post.

In the next post, we'll explore how we aggregate these trades into candles using ClickHouse's aggregation functions, and how clients query this data directly via SQL.

This is Part 1 of a 3-part series on cryptocurrency market data infrastructure. Part 2 will cover storage and aggregation of trades into candles and indexes with ClickHouse. Part 3 will cover data distribution and client access.

Want to see it in action?

Our API provides provable completeness for 25+ exchanges.

For developers: Try our API → docs.koinju.io

For fund administrators: See how we handle your reconciliation → Book a demo!

Questions on your specific use case? → Contact us!